- DO WE NEED TO INSTALL APACHE SPARK ARCHIVE

- DO WE NEED TO INSTALL APACHE SPARK CODE

- DO WE NEED TO INSTALL APACHE SPARK PASSWORD

- DO WE NEED TO INSTALL APACHE SPARK DOWNLOAD

After connecting with `!connect jdbc:hive2://localhost:10015 testuser testpass` at the `beeline>` prompt, Beeline prints `Connecting to jdbc:hive2://localhost:10015`. It then prints the log4j warnings `log4j:WARN No appenders could be found for logger (.Utils).` and `log4j:WARN Please initialize the log4j system properly.`, followed by `Transaction isolation: TRANSACTION_REPEATABLE_READ`. At the resulting `0: jdbc:hive2://localhost:10015>` prompt, we can check which tables exist with `show tables;`.

The user name and password above are accepted only because no security is configured. For production scenarios you would not do this.
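The same check can be scripted instead of typed at the prompt. This is a minimal sketch. It assumes Beeline lives at `$SPARK_HOME/bin/beeline` and accepts its usual `-u` (JDBC URL), `-n` (user), `-p` (password) and `-e` (query) options. The function name `run_beeline_query` and the `DRY_RUN` switch are hypothetical helpers added only for illustration.

```shell
#!/bin/sh
# Minimal sketch: run one SQL statement against the Spark Thrift Server
# without an interactive Beeline session.
# Assumptions: Beeline is at $SPARK_HOME/bin/beeline and supports -u/-n/-p/-e;
# run_beeline_query and DRY_RUN are hypothetical helpers, not part of Spark.
run_beeline_query() {
  bq_query=$1
  bq_url="jdbc:hive2://localhost:10015"  # Thrift Server port used in this walkthrough
  bq_user="testuser"                     # accepted only because no security is configured
  bq_pass="testpass"

  if [ "${DRY_RUN:-0}" = "1" ]; then
    # Print the command line instead of executing it
    printf '%s\n' "$SPARK_HOME/bin/beeline -u $bq_url -n $bq_user -p $bq_pass -e \"$bq_query\""
  else
    "$SPARK_HOME/bin/beeline" -u "$bq_url" -n "$bq_user" -p "$bq_pass" -e "$bq_query"
  fi
}
```

Some examples of calling it:

- `run_beeline_query "show tables;"` sends the query to the server on port 10015.
- `DRY_RUN=1 run_beeline_query "show tables;"` only prints the command it would run.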

DO WE NEED TO INSTALL APACHE SPARK PASSWORD

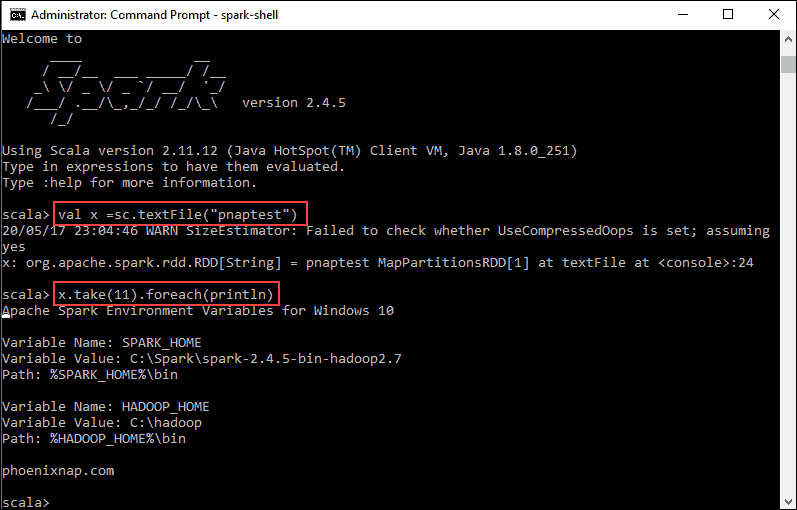

With the data ready, we can now launch the Spark shell from `$SPARK_HOME` and test it using a sample command that reads an `oci://` path. You receive an error at this point because the `oci://` file system scheme is not available.

To fix this, reference the HDFS Connector JAR when starting the Spark shell:

`bin/spark-shell --jars $HOME/oci-hdfs/lib/oci-hdfs-full-1.2.7.jar --driver-class-path $HOME/oci-hdfs/lib/oci-hdfs-full-1.2.7.jar`

At the `scala>` prompt, `wholeTextFiles` now returns an RDD (`.RDD = MapPartitionsRDD at wholeTextFiles at :25`). Reading the MovieLens data prints rows such as:

- `Story (1995),Adventure|Animation|Children|Comedy|Fantasy`
- `2,Jumanji (1995),Adventure|Children|Fantasy`
- `4,Waiting to Exhale (1995),Comedy|Drama|Romance`
- `5,Father of the Bride Part II (1995),Comedy`
- `10,GoldenEye (1995),Action|Adventure|Thriller`
- `11,"American President, The (1995)",Comedy|Drama|Romance`
- `12,Dracula: Dead and Loving It (1995),Comedy|Horror`
- `13,Balto (1995),Adventure|Animation|Children`
- `15,Cutthroat Island (1995),Action|Adventure|Romance`
- `17,Sense and Sensibility (1995),Drama|Romance`
- `19,Ace Ventura: When Nature Calls (1995),Comedy`

The command is successful, so we are able to connect to Object Storage. If you do not wish to pass the `--jars` argument each time the command executes, you can instead copy the oci-hdfs-full JAR file into the `$SPARK_HOME/jars` directory.

Next, start the Spark Thrift Server on port 10015. Then use the Beeline command line tool to establish a JDBC connection and run a basic query. From `$SPARK_HOME`, start the server:

`sbin/start-thriftserver.sh --hiveconf =10015`

Once the Spark server is running, launch Beeline from `$SPARK_HOME`; it reports `Beeline version 1.2.1.spark2 by Apache Hive`. Then connect to the server from the `beeline>` prompt.

Note: For the purposes of this example, we have not configured any security, so any user name and password will be accepted.
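If you take the copy-the-JAR route described above, a small guard makes a wrong path fail loudly instead of silently leaving `oci://` unavailable. This is a minimal sketch. It assumes the connector was unpacked to `$HOME/oci-hdfs`, as in the `spark-shell` command. `install_connector_jar` is a hypothetical helper, not part of Spark or the connector.

```shell
#!/bin/sh
# Sketch: put the HDFS Connector JAR into $SPARK_HOME/jars so spark-shell
# can be started without --jars.
# install_connector_jar is a hypothetical helper name.
install_connector_jar() {
  ic_jar=$1          # e.g. $HOME/oci-hdfs/lib/oci-hdfs-full-1.2.7.jar
  ic_spark_home=$2   # e.g. $SPARK_HOME
  if [ ! -f "$ic_jar" ]; then
    echo "connector JAR not found: $ic_jar" >&2
    return 1
  fi
  if [ ! -d "$ic_spark_home/jars" ]; then
    echo "no jars/ directory under: $ic_spark_home" >&2
    return 1
  fi
  # Copy the JAR next to Spark's own JARs
  cp "$ic_jar" "$ic_spark_home/jars/"
}
```

For example, run `install_connector_jar "$HOME/oci-hdfs/lib/oci-hdfs-full-1.2.7.jar" "$SPARK_HOME"`. After that, `bin/spark-shell` should pick up the JAR without `--jars`.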

DO WE NEED TO INSTALL APACHE SPARK DOWNLOAD

For additional information, see HDFS Connector for Object Storage.

Follow these steps:

1. Create or copy your API key into the `$HOME/.oci` directory.
2. Create the remaining configuration file, for example by transferring one you have or by using vi.
3. Create a `nf` file from its template with `cp`.
4. At the bottom of the `nf` file, add the following setting:

`sharedPrefixes= shaded.oracle,`

Prepare Data

For testing data, we will use the MovieLens data set. Be sure to download the "Small" data set.
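The key and configuration steps above can be scripted. This is a sketch under stated assumptions:

- The connector reads its settings from a Hadoop `core-site.xml` using the `fs.oci.client.*` property names from the HDFS Connector for Object Storage documentation (verify them there).
- Every value passed in is a placeholder you supply.
- Both function names are hypothetical.

```shell
#!/bin/sh
# Sketch: stage the API key and write connector settings.
# Assumptions: core-site.xml with fs.oci.client.* properties; all values are
# placeholders supplied by the caller. Function names are hypothetical.

# Copy the API signing key into a private directory with owner-only access.
install_api_key() {
  key_src=$1      # existing private key file
  key_dir=$2      # e.g. "$HOME/.oci"
  mkdir -p "$key_dir" || return 1
  chmod 700 "$key_dir"
  cp "$key_src" "$key_dir/" || return 1
  chmod 600 "$key_dir/$(basename "$key_src")"
}

# Write a minimal core-site.xml into the given directory.
# Values are inserted verbatim (no XML escaping), so keep them plain.
write_oci_core_site() {
  conf_dir=$1 oci_host=$2 tenancy_ocid=$3 user_ocid=$4 key_fingerprint=$5 pem_path=$6
  mkdir -p "$conf_dir" || return 1
  cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property><name>fs.oci.client.hostname</name><value>$oci_host</value></property>
  <property><name>fs.oci.client.auth.tenantId</name><value>$tenancy_ocid</value></property>
  <property><name>fs.oci.client.auth.userId</name><value>$user_ocid</value></property>
  <property><name>fs.oci.client.auth.fingerprint</name><value>$key_fingerprint</value></property>
  <property><name>fs.oci.client.auth.pemfilepath</name><value>$pem_path</value></property>
</configuration>
EOF
}
```

The hostname, OCIDs, and fingerprint come from your own tenancy. The key file mode matches the home-directory setup used in this example.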

DO WE NEED TO INSTALL APACHE SPARK CODE

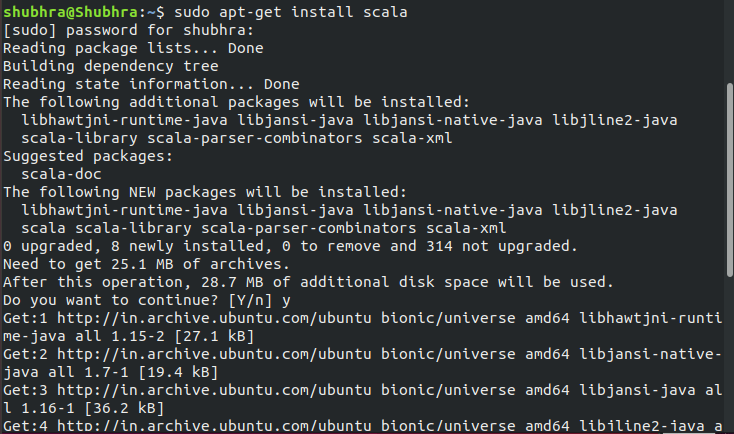

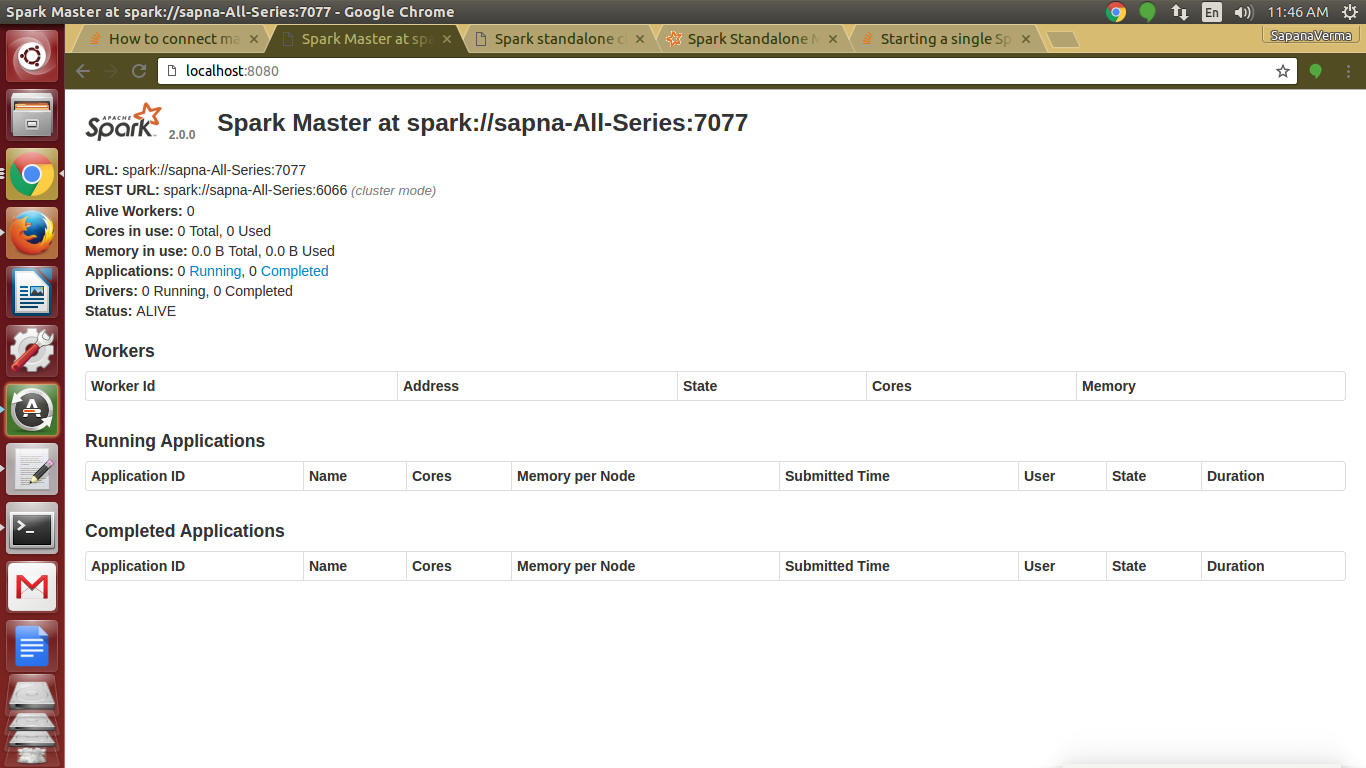

We'll use wget to download some of the artifacts that need to be installed.

1. Install Java with `sudo yum install java-1.8.0-openjdk.x86_64`.
2. Set `export JAVA_HOME=/usr/lib/jvm/jre-1.8.0-openjdk`. The Java version check should report something like `OpenJDK Runtime Environment (build 1.8.0_161-b14)`.
3. Check the Scala installation. The version check should report something like `Scala code runner version 2.12.4 -- Copyright 2002-2017, LAMP/EPFL and Lightbend, Inc.`
4. Point `SPARK_HOME` at the Spark distribution with `export SPARK_HOME=$HOME/spark-2.2.1-bin-hadoop2.7`.
5. From `$SPARK_HOME`, start the Spark master with `sbin/start-master.sh`.

Download the HDFS Connector and Create Configuration Files

Download the HDFS Connector to the service instance and add the relevant configuration files.

Note: For the purposes of this example, place the JAR and key files in the current user's home directory. For production scenarios you would instead put these files in a common place that enforces the appropriate permissions (that is, readable by the user under which Spark and Hive are running).
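Before running `sbin/start-master.sh`, it can help to confirm that the two variables exported above point somewhere sensible. This is a minimal sketch. `check_spark_env` is a hypothetical helper, and it checks paths only, not Java or Spark versions.

```shell
#!/bin/sh
# Sketch: sanity-check JAVA_HOME and SPARK_HOME before starting the master.
# check_spark_env is a hypothetical helper; it checks paths only.
check_spark_env() {
  if [ -z "${JAVA_HOME:-}" ] || [ ! -d "$JAVA_HOME" ]; then
    echo "JAVA_HOME is unset or not a directory: '${JAVA_HOME:-}'" >&2
    return 1
  fi
  if [ -z "${SPARK_HOME:-}" ] || [ ! -x "$SPARK_HOME/sbin/start-master.sh" ]; then
    echo "SPARK_HOME has no executable sbin/start-master.sh: '${SPARK_HOME:-}'" >&2
    return 1
  fi
  echo "ok"
}
```

Typical use is `check_spark_env && "$SPARK_HOME/sbin/start-master.sh"`. This starts the master only when both paths look right.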

For guidance, see Connecting to an Instance.

DO WE NEED TO INSTALL APACHE SPARK ARCHIVE

Note: Versions 2.7.7.0 and later no longer install all of the required third-party dependencies. The required third-party dependencies are bundled under the `third-party/lib` folder in the zip archive and should be installed manually.
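Installing the bundled dependencies by hand can be as simple as copying them onto Spark's classpath. This sketch assumes two things:

- The zip was unpacked so that `third-party/lib` sits under a directory such as `$HOME/oci-hdfs`.
- Copying into `$SPARK_HOME/jars` is an acceptable way to install them for your setup.

`install_third_party_jars` is a hypothetical helper. It skips files that already exist in the target so Spark's own JARs are never overwritten. A different version of the same library can still conflict, so review what gets copied.

```shell
#!/bin/sh
# Sketch: copy the connector's bundled third-party JARs onto Spark's classpath.
# Assumptions: $1 is the unpacked archive (containing third-party/lib) and
# $2 is a Spark installation with a jars/ directory. Hypothetical helper name.
install_third_party_jars() {
  tp_src="$1/third-party/lib"
  tp_dest="$2/jars"
  if [ ! -d "$tp_src" ] || [ ! -d "$tp_dest" ]; then
    echo "expected $tp_src and $tp_dest to exist" >&2
    return 1
  fi
  tp_copied=0
  for tp_jar in "$tp_src"/*.jar; do
    [ -f "$tp_jar" ] || continue                         # no match: glob stays literal
    [ -e "$tp_dest/$(basename "$tp_jar")" ] && continue  # never overwrite an existing JAR
    cp "$tp_jar" "$tp_dest/" || return 1
    tp_copied=$((tp_copied + 1))
  done
  echo "$tp_copied"   # number of JARs newly copied
}
```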
